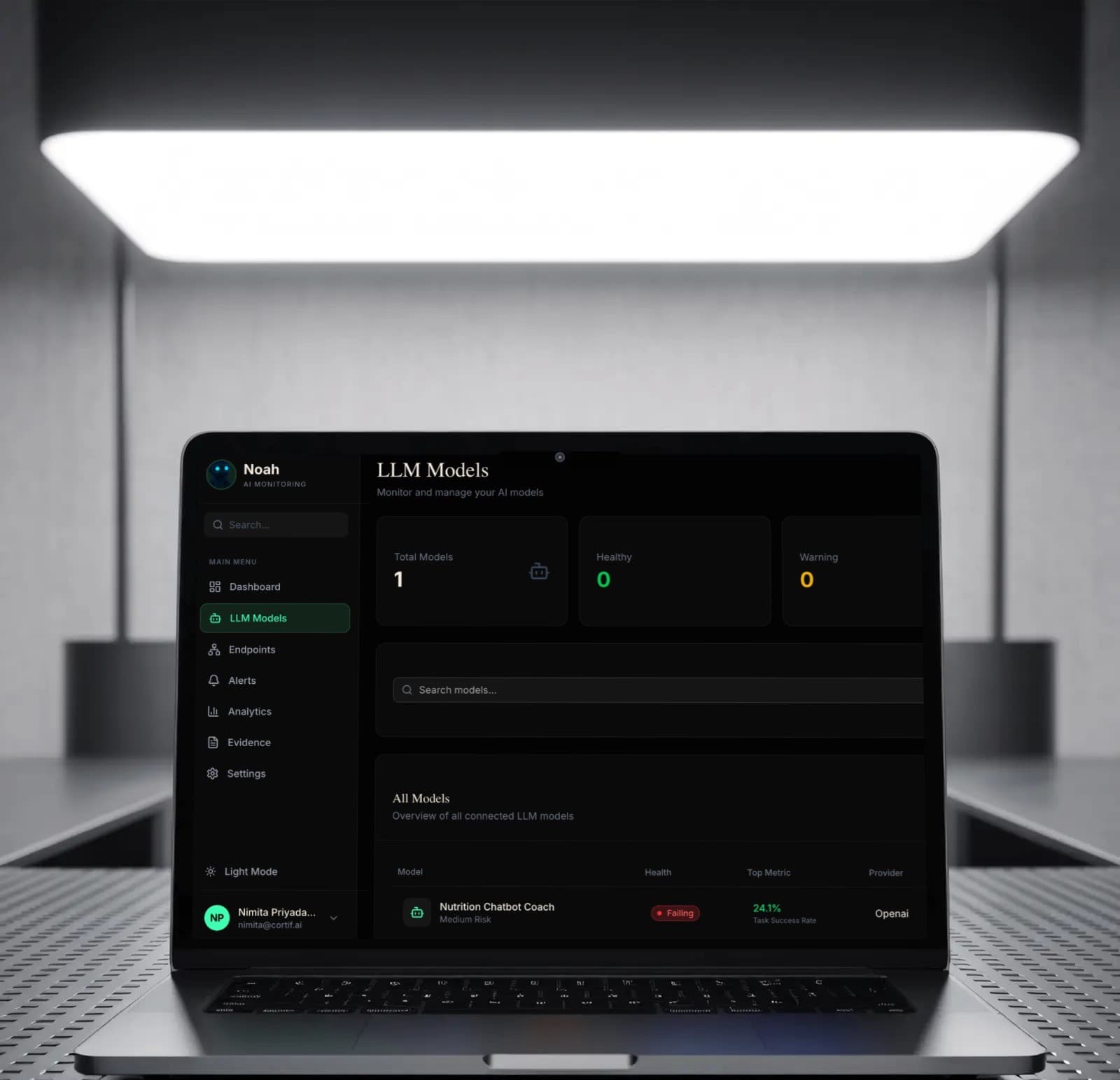

Complete visibility into your LLM stack

Monitor, analyze, and optimize your AI models in real time. From token usage to sentiment analysis, Noah gives you the insights you need to scale with confidence.

Real-time insights for mission-critical models.

Don’t fly blind. Track latency, throughput, and error rates as they happen. Our dashboard updates instantly, allowing you to catch regressions before your users do.

- ✓ Live token usage tracking per request

- ✓ P95 and P99 latency monitoring

- ✓ Detailed error rate breakdown by model type

Latency (ms)

Input Prompt

“Generate a response regarding the competitor’s pricing model...”

Analysis Result

Sentiment: Neutral

PII Detected: None

Topic Drift: High Confidence

Understand what your models are saying.

Go beyond simple metrics. Noah analyzes the semantic content of every interaction to ensure safety, quality, and alignment with your business goals.

- ✓ Automated PII detection and redaction

- ✓ Sentiment and tone analysis

- ✓ Drift detection for long-running conversations

Cost Tracking

Monitor spending across all providers (OpenAI, Anthropic, Mistral, Azure) in a unified view. Set budget alerts to prevent overages.

Robustness Scoring

Automatically test your models against adversarial attacks and edge cases to ensure system integrity.

Agent Noah

Get alerts and daily summaries directly in Slack or Microsoft Teams. Ask questions about your data in natural language.

Version Control

Track prompt versions and model configurations. Roll back instantly if a new deployment degrades performance.

Golden Datasets

Curate and maintain high-quality datasets for evaluation and fine-tuning directly within the platform.

On-premise Options

For strict compliance needs, deploy Noah entirely within your own VPC or on-premise infrastructure.

Ready to optimize your models?

Join engineering teams at leading AI companies who trust Noah for their observability stack.